August 12, 2021


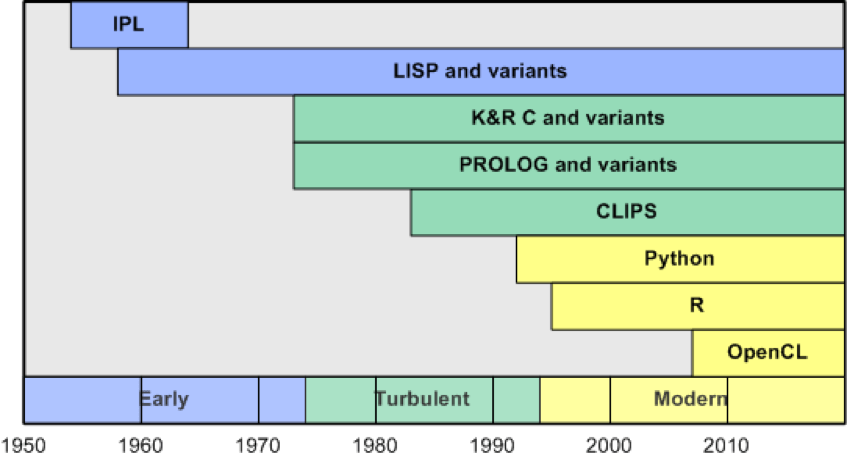

Before Python, these were the languages used for AI

Today, learning artificial intelligence has become almost synonymous with learning to program in Python. This programming language, created by Guido van Rossum in 1991, is by far the most widely used in artificial intelligence projects today, especially in the field of ‘machine learning’.

Beyond its popularity as a general-purpose programming language (and in related fields such as data analysis), it helps that all the major AI libraries (Keras, TensorFlow, SciPy, Pandas, Scikit-learn, etc.) are designed to work with Python.

However, Artificial Intelligence is much older than Python, and there were other languages that excelled in this field for decades before it landed. Let’s take a look at them:

IPL

The Information Processing Language (IPL) is a low-level language (almost as low-level as assembly) that was created in 1956 to show that the theorems in the ‘Principia Mathematica’, by the mathematicians and philosophers Bertrand Russell and Alfred North Whitehead, could be proved by computer.

IPL introduced programming features that are still fully in use today, such as symbols, recursion, and lists. The list was a data type so flexible that one list could be an element of another list (which could in turn be an element of yet another list, and so on). It was essential to the development of the first AI programs, such as Logic Theorist (1956) and the NSS chess program (1958).
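To make the idea of lists inside lists concrete, here is a minimal sketch in Python rather than IPL (whose syntax is not shown in this article). The function name `count_atoms` and the sample data are illustrative assumptions; the point is only that a recursive function is a natural fit for a structure that can contain itself.

```python
def count_atoms(items: list) -> int:
    """Recursively count the non-list elements in a nested list."""
    total = 0
    for item in items:
        if isinstance(item, list):
            total += count_atoms(item)  # a sublist: recurse into it
        else:
            total += 1                  # an atom: count it once
    return total


# Hypothetical sample data: lists used as elements of other lists.
inner = ["b", "c"]
middle = ["a", inner]
outer = [middle, "d", ["e"]]

print(count_atoms(outer))  # 5
```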

Despite its importance in the history of AI languages, several factors (the first being the complexity of its syntax) caused it to be quickly replaced by the next language on this list.

LISP

LISP is the oldest programming language dedicated to artificial intelligence among those still in use; it is also the second high-level programming language in history. It was created in 1958 (one year after FORTRAN and one before COBOL) by John McCarthy, who two years earlier had coined the term ‘artificial intelligence’.

Shortly before, McCarthy had developed a language called FLPL (FORTRAN List Processing Language), an extension of FORTRAN, and he decided to combine in a single language the high-level nature of FLPL, all the novelties introduced by IPL, and the formal system known as lambda calculus. The result was named LISP (from ‘LISt Processor’).

While he was developing FLPL, McCarthy was also formulating so-called ‘alpha-beta pruning’, a search technique that reduces the number of nodes evaluated in a game tree. To implement it, he introduced an element as fundamental to programming as the if-then-else structure.
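As a rough illustration of how pruning reduces the number of nodes evaluated, the sketch below applies alpha-beta pruning to a tiny hand-made game tree. It is written in Python, not in McCarthy's original notation, and everything in it is an assumption made for the example: the tree representation (a number is a leaf score, a list is a set of child positions), the function name `alphabeta`, and the optional `visited` list used to record which leaves were actually looked at.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Return the minimax value of `node`, skipping branches that cannot matter.

    A node is either a number (a leaf score) or a list of child nodes.
    `visited`, if given, collects the leaves that were actually evaluated.
    """
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node

    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the minimizing player will never allow this line
                break
        return best
    else:
        best = math.inf
        for child in node:
            best = min(best, alphabeta(child, True, alpha, beta, visited))
            beta = min(beta, best)
            if alpha >= beta:  # the maximizing player already has a better option
                break
        return best


# Hypothetical two-level tree: the maximizer moves first, then the minimizer.
tree = [[3, 5], [2, 9], [0, 1]]
seen = []
value = alphabeta(tree, True, visited=seen)

print(value)  # 3
print(seen)   # [3, 5, 2, 0]
```

In this small tree only four of the six leaves are evaluated: once the second and third branches are known to be worth less than 3 to the maximizer, their remaining leaves are skipped. Note how the whole procedure rests on if-then-else decisions.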

Programmers quickly fell in love with the freedom offered by the flexibility of this language, and with its role as a prototyping tool. Thus, for the next quarter-century, LISP became the reference language in the field of AI. Over time, LISP fragmented into a whole series of ‘dialects’ that are still in use in various computing fields, such as Common Lisp, Emacs Lisp, Clojure, Scheme, and Racket.

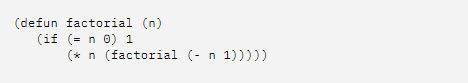

The following example illustrates a LISP function to calculate the factorial of a number. In the code snippet, notice the use of recursion to calculate the factorial (calling factorial within the factorial function). This function could have been invoked with (factorial 9).
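For readers more at home in Python, the same recursive idea can be sketched as follows. This is a Python rendering written for this article, not a transcription of the LISP code; the guard against negative input is an added assumption.

```python
def factorial(n: int) -> int:
    """Compute n! recursively: n! = n * (n - 1)!, with 0! = 1! = 1."""
    if n < 0:
        raise ValueError("factorial is not defined for negative numbers")
    if n <= 1:
        return 1
    return n * factorial(n - 1)  # the function calls itself on a smaller input


print(factorial(9))  # 362880
```

The call `factorial(9)` here plays the role of `(factorial 9)` in LISP.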

In 1968, Terry Winograd developed a state-of-the-art program in LISP called SHRDLU that could interact with a user in natural language. The program modeled a world of blocks.

The user could interact with that world, directing the program to query and manipulate it using statements such as “lift the red block” or “can a pyramid be supported by a block?” This demonstration of planning and natural language understanding within a simple physics-based blocks world generated considerable optimism for AI and the LISP language.

PROLOG

The PROLOG language (from the French ‘Programmation en logique’) was born at a difficult time for the development of artificial intelligence: on the threshold of the first ‘AI Winter’, a time when the initial enthusiasm for the applications of this technology crashed against the skepticism caused by the lack of progress, which led to public and private disinvestment in its development.

Specifically, it was created in 1972 by the French computer engineering professor Alain Colmerauer to introduce Horn clauses, a restricted form of logical formula, into software development. Although it never became as widely used as LISP globally, it did become the primary language of AI development in its home continent (as well as in Japan).

Because it is based on the declarative programming paradigm (as is LISP, for that matter), its syntax is very different from that of typical imperative programming languages such as Python, Java, or C++.
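To give a feel for what programming with Horn clauses means, the sketch below encodes a few rules of the form "if all of these hold, then this holds" and derives their consequences. It is a deliberately simplified illustration in Python: the rules are purely propositional, the atom names are invented for the example, and the derivation uses forward chaining, whereas PROLOG itself works backward from a query and supports variables.

```python
def forward_chain(facts, rules):
    """Derive every atom that follows from `facts` under propositional Horn rules.

    Each rule is a pair (body, head): if every atom in `body` is known,
    then `head` can be added to the known atoms.
    """
    known = set(facts)
    changed = True
    while changed:  # repeat until a full pass adds nothing new
        changed = False
        for body, head in rules:
            if head not in known and all(atom in known for atom in body):
                known.add(head)
                changed = True
    return known


# Hypothetical rules and facts for a tiny blocks scenario.
rules = [
    (("block_a_on_table", "block_a_clear"), "can_lift_block_a"),
    (("can_lift_block_a", "hand_empty"), "can_hold_block_a"),
]
facts = {"block_a_on_table", "block_a_clear", "hand_empty"}

derived = forward_chain(facts, rules)
print("can_hold_block_a" in derived)  # True
```

The program states what is true and what follows from what; it never spells out the order in which the conclusions should be computed, which is the essence of the declarative style.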

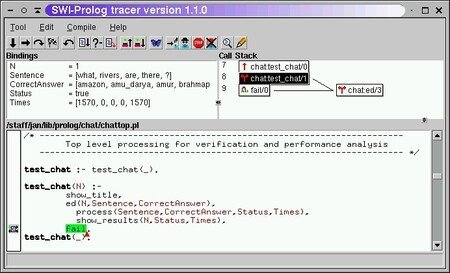

PROLOGUE’s ease in handling recursive methods and pattern matching caused IBM to bet on implementing PROLOG in its IBM Watson for natural language processing tasks.

PROLOG code example in the IDE SWI-Prolog.

The last 60 years have seen significant changes in computing architectures and advances in AI techniques and applications. Those years have also seen an evolution of AI languages, each one with its own functions and approaches to problem-solving. But today, with the introduction of big data and new processing architectures that include CPUs clustered with GPU arrays, the foundation has been laid for a host of innovations in AI and the languages that power it.
